SUMMARY

- The Center on Democracy, Human Rights, and Governance (DRG) at the US Agency for International Development (USAID) created an internal “Disinformation Primer” that revealed the agency’s explicit praise for private sector censorship strategies and proposed additional censorship practices and techniques.

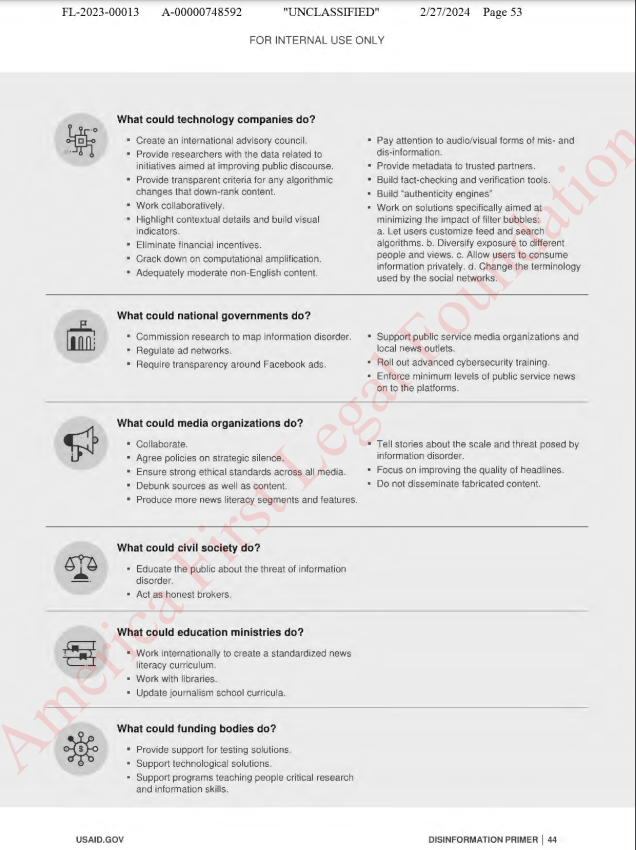

- USAID’s censorship proposals were aimed at influencing private sector technology companies, media organizations, education ministries, national governments and funding bodies.

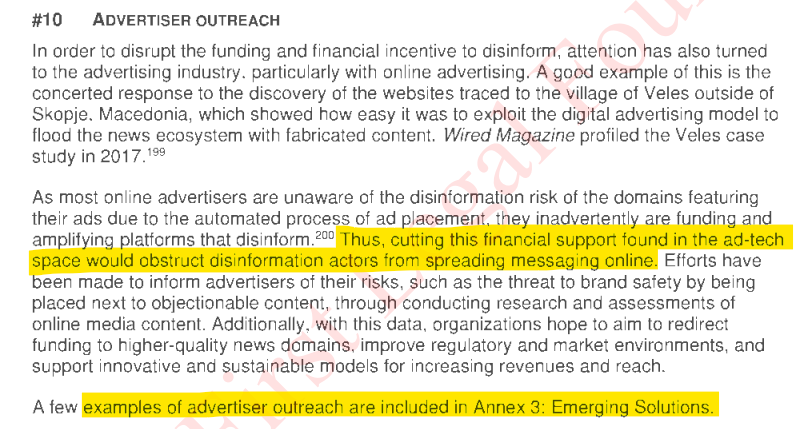

- USAID endorsed “Advertiser Outreach” for the purpose of getting corporate advertisers to financially throttle disfavored media sources and social media accounts.

- USAID recommended Google’s Redirect Method and “prebunking” (i.e., psychological inoculation) as potential solutions to stop the erosion of traditional media’s influence over citizens’ hearts and minds.

- USAID proposed targeting gamers and gaming sites, pushing the need to censor their formation of “interpretations of the world that differ from ‘mainstream’ sources” and to interrupt the process by which “individuals contribute their own ‘research’” to collectively form their own “populist expertise.”

USAID — the foreign-facing wing of the federal government that purports to use taxpayer dollars for “strengthening resilient democratic societies” and exporting American democratic ideals abroad — has been quietly pushing private sector technology companies, media organizations, education ministries, national governments and funding bodies to adopt social media censorship practices, according to newly revealed internal documents.

USAID’s 97-page proprietary “Disinformation Primer,” labeled “for internal use only,” was obtained by America First Legal (AFL), a public interest law firm conducting a number of major investigations and lawsuits into the censorship industry and its government partner agencies. AFL obtained the document after a lengthy process in which the US Department of State failed to respond to a Freedom of Information Act (FOIA) request and was compelled to turn over the records after AFL succeeded in a formal lawsuit.

The USAID Disinformation Primer reveals a detailed compilation of the agency’s advocacy for some of the most economically damaging censorship strategies (many of which had been deployed in America to throttle populists in the 2020 elections and afterward) and endorses recommendations from some of the most controversial censorship industry institutions under scrutiny by the US Congress.

USAID’s “Disinformation Primer,” which outlines its censorship promotion strategies, is dated February 2021, the first full month of the Biden administration following the 2020 election. It proposes censorship measures for virtually every governmental, non-governmental, and private sector commercial actor across society to take, both individually and collectively, against disfavored speech online.

Throttling of Disfavored Information Sources in Favor of Protecting Mainstream Media

USAID’s primer includes a list of recommended approaches for civil society organizations (NGOs, think tanks, and non-profits, many of which function as government cut-outs) and the mainstream media to take action against disfavored online speech. One of USAID’s recommendations openly promotes a well-established tactic of the censorship industry: financial blacklisting. The USAID Disinformation Primer references “advertisers” 31 times in just 97 pages.

Below, for example, is the Disinformation Primer’s description of its “Advertiser Outreach” recommendation, in which USAID encourages the financial throttling of agency-disfavored information outlets (p. 51):

ADVERTISER OUTREACH In order to disrupt the funding and financial incentive to disinform, attention has also turned to the advertising industry, particularly with online advertising. A good example of this is the concerted response to the discovery of the websites traced to the village of Veles outside of Skopje, Macedonia, which showed how easy it was to exploit the digital advertising model to flood the news ecosystem with fabricated content. Wired Magazine profiled the Veles case study in 2017. As most online advertisers are unaware of the disinformation risk of the domains featuring their ads due to the automated process of ad placement, they inadvertently are funding and amplifying platforms that disinform. Thus, cutting this financial support found in the ad-tech space would obstruct disinformation actors from spreading messaging online. Efforts have been made to inform advertisers of their risks, such as the threat to brand safety by being placed next to objectionable content, through conducting research and assessments of online media content. Additionally, with this data, organizations hope to aim to redirect funding to higher-quality news domains, improve regulatory and market environments, and support innovative and sustainable models for increasing revenues and reach.

USAID’s censorship guidebook characterizes increased online competition and growing distrust of traditional media as problematic because they reduce traditional media’s power to “shape local and national dialogue.”

In the Disinformation Primer, USAID takes aim at those who engage in “casting doubt on media”:

Democratic societies rely on journalists, media outlets, and bloggers to help shape local and national dialogue, shine a light on corruption, and provide truthful, accurate information that can inform people and help them make the decisions needed to live and thrive…..

The nature of how people access information is changing along with the information technology boom and the decline of traditional print media. Because traditional information systems are failing, some opinion leaders are casting doubt on media, which, in turn, impacts USAID programming and funding choices.

USAID goes further, calling the decline of traditional media “a loss of information integrity” and presenting the censorship concept of “media literacy” as the solution. From p. 15:

“It leads to a loss of information integrity. Online news platforms have disrupted the traditional media landscape. Government officials and journalists are not the sole information gatekeepers anymore. As such, citizens require a new level of information or media literacy to evaluate the veracity of claims made on the internet.”

“Media literacy” is the idea of psychologically training citizens not to trust or access certain types of establishment-disfavored media. FFO previously reported on media literacy efforts in the US, particularly their manifestation within the education system. NewsGuard, which offers a media literacy service, has partnered with the 1.7-million-member American Federation of Teachers as a client, and states such as California are passing laws mandating the study of “media literacy” in schools in an effort to pre-bias children against certain information sources.

FFO Executive Director Mike Benz produced the short video primer below on the controversial programs that fall under “media literacy”:

The “Media Literacy” scam is accelerating way too fast & it has to be stopped NOW.

Here’s why: pic.twitter.com/GCr7Q54adF

— Mike Benz (@MikeBenzCyber) November 21, 2023

“Psychological Inoculation”

In addition to media literacy, the censorship industry has produced similar efforts to psychologically influence the public, preemptively “protecting” and psychologically inoculating individuals against disfavored information or redirecting them to favored content.

One such method recommended in the USAID report is Google’s Redirect Method, which is described in the Disinformation Primer as redirecting users who view disfavored content toward establishment-curated information (p. 46):

The Redirect Method primarily relies on advertising using an online advertising platform such as Google AdWords, targeting tools and algorithms to combat online radicalization that comes from the spread and threat of dangerous, misleading information. The method redirects users through ads who seek to access mis/disinformation online to curated YouTube videos uploaded by individuals around the world that debunk these posts, videos, or website messages. The Redirect Method, a method used to target individuals susceptible to ISIS radicalization via recruiting measures, is being adapted in several countries to combat vaccine hesitancy and hate speech. The method was developed by a collaboration among private and civil society organizations and is documented on The Redirect Method website. The collaboration provides steps in the organization of an online redirect campaign. Other examples of the use of redirection are being employed by groups around the world.

FFO described in a November 2023 report how Jigsaw, Google’s self-proclaimed “think/do” tank, developed the Redirect Method as a precursor to a censorship concept known as “prebunking”:

Jigsaw also developed a tool called the “redirect method.” Launched in 2016, the redirect method was an early precursor to the toolkit now known as prebunking. The redirect method identifies when a user is searching for disfavored content, and automatically inserts search results intended to divert their attention to links or material that challenges or undermines the content they wanted to discover.

USAID’s censorship guide proposes a prebunking strategy recommended by influential censorship industry insider Joan Donovan, who formerly led Harvard’s counter-disinformation lab. Donovan, a controversial proponent of US domestic social media censorship, is quoted ten times throughout USAID’s Disinformation Primer. For example, from p. 56:

As a measure to counter disinformation and make debunking more impactful, Donovan recommends prebunking, which she defines as “an offensive strategy that refers to anticipating what disinformation is likely to be repeated by politicians, pundits, and provocateurs during key events and having already prepared a response based on past fact checks.” Prebunking is drawn from inoculation theory, which seeks to explain how an attitude or belief can be protected against persuasion, and people can build up an immunity to mis/disinformation.

USAID’s Disinformation Primer also formally acknowledges Dean Jackson, amongst others, for “sharing their time, expertise, and opinions” to produce the government’s censorship recommendations. FFO previously uncovered how Jackson co-led an effort to produce an election censorship blueprint report for the Center for Democracy and Technology (CDT) targeting the speech of US citizens.

Hamilton 68’s Successor

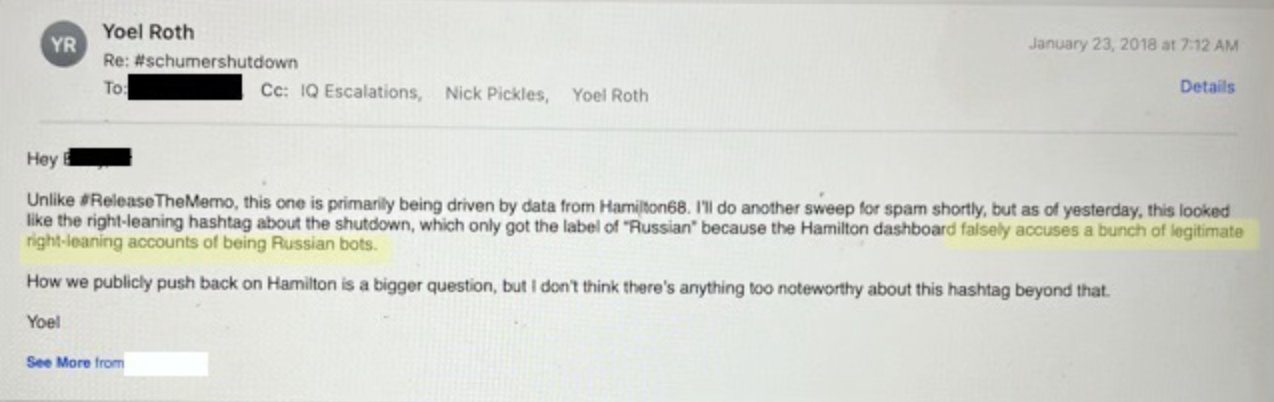

USAID’s Disinformation Primer also suggests various other technological tools for combatting disinformation. Amongst the recommended tools listed is the German Marshall Fund’s Hamilton 2.0 Dashboard, the successor to the controversial Hamilton 68 dashboard.

The Twitter Files, which revealed internal conversations amongst Twitter executives, exposed the dubious nature of the Hamilton 68 dashboard, which purportedly tracked narratives and content created by Russian bot accounts. In reality, as internal emails from Yoel Roth, Twitter’s former head of Trust and Safety, revealed, the dashboard barely caught any Russian accounts in its net. Instead, it labeled authentic accounts of ordinary Americans, Canadians, and British citizens as Russian bots to falsely brand domestic populist voices as Russian influence operations.

The Twitter Files emails show Roth complaining that Hamilton 68, a dashboard developed by the Alliance for Securing Democracy, “falsely accuses a bunch of legitimate right-leaning accounts of being Russian bots.” Denouncing Hamilton 68’s analysis as “bullsh*t,” Roth noted that “Virtually any conclusion drawn from it will take conversations in conservative circles on Twitter and accuse them of being Russian.”

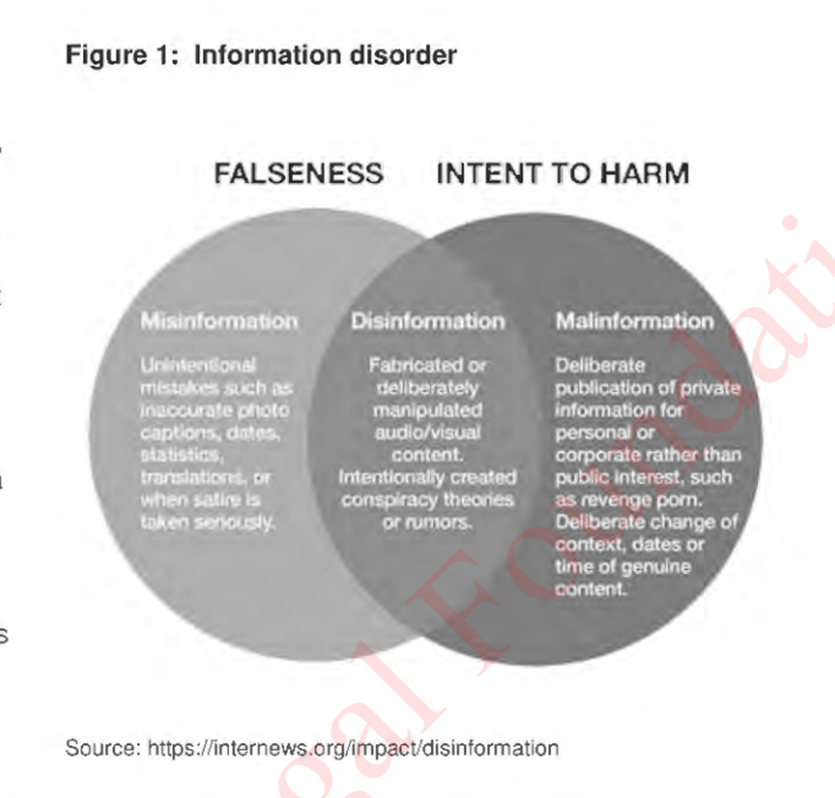

USAID Targets ‘Malinformation’

In addition to misinformation (speech that is inaccurate or unintentionally causes harm) and disinformation (speech that is deliberately created to cause harm), USAID mimics the censorship industry’s proclivity to also target factually true speech under the label of “malinformation.” Malinformation is speech that is factually accurate but is deemed wrongthink by the censors because it presents an oppositional narrative.

The agency’s definition of “malinformation,” from p. 4 of the USAID Disinformation Primer:

Additionally, “malinformation” is deliberate publication of private information for personal or private interest, as well as the deliberate manipulation of genuine content. This is often done by moving private or revealing information about an individual, taken out of context, into the public sphere.

Under this definition, any speech, regardless of its factual accuracy or reasoning, can be deemed “taken out of context” and throttled without much explanation. An ordinary citizen can state an opinion on a politically or socially sensitive topic, back it with factual evidence, and still be deemed wrong by a government agency that purports to advocate for democracy.

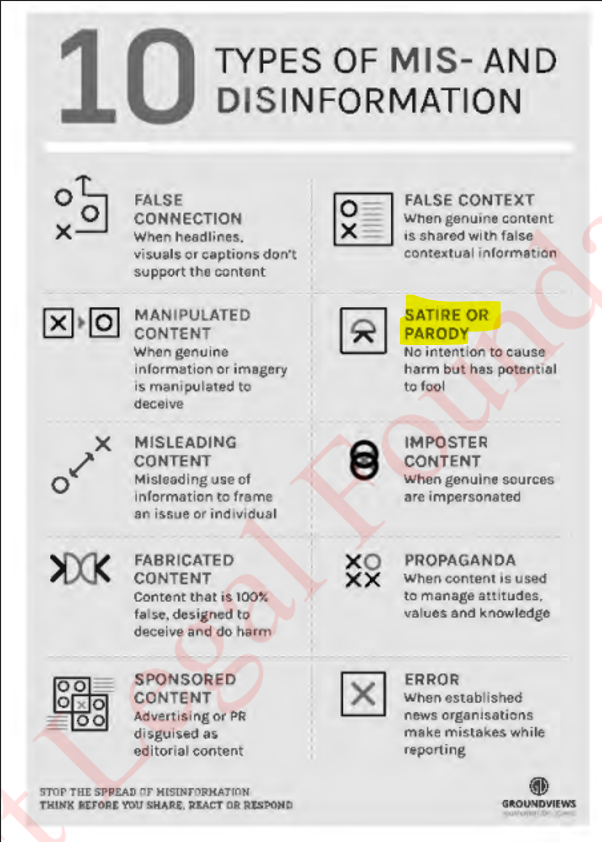

Satire and Parody Deemed Disinformation

USAID’s Disinformation Primer includes “Satire or Parody” in its “Ten Types Of Mis- and Disinformation” compendium.

USAID Targets Gamers, Pushes Need To Censor “Interpretations Of The World That Differ From ‘Mainstream’ Sources”

In one revealing section of USAID’s Disinformation Primer, the agency identifies the top disinformation threat not as “state actors driving the issue” but as the fact that “problematic information more regularly originates from networks of alternative sites and anonymous individuals who have created their own ‘alt media’ online spaces.” In other words, USAID sees the key threat as ordinary individuals forming independent opinions online, not foreign intelligence agencies performing complex influence operations.

USAID goes on to say, “These alternative spaces include message board and digital distribution platforms (e.g., Reddit, 4chan, or Discord)… and gaming sites.”

USAID bemoans that such gaming sites and message boards allow ordinary citizens to form “interpretations of the world that differ from ‘mainstream’ sources. With this, individuals contribute their own ‘research’ to the larger discussion, collectively reviewing and validating each other to create a populist expertise that justifies, shapes and supporters their alternative views.”

From p. 37 of the USAID censorship proposal report:

For more information on USAID’s elaborate role in funding and coordinating Internet censorship practices, see this FFO deep dive report from July 2022.