The Stanford Internet Observatory’s in-house podcast, Moderated Content, called its first-ever “emergency edition” the week Elon Musk completed his acquisition of Twitter in October 2022. Moderated Content draws its name from “content moderation,” the euphemistically named professional field of removing speech from the Internet, in which “moderation” is the palatable word for takedowns or throttling of social media posts.

The Stanford Internet Observatory (via its parent, the Stanford Cyber Policy Center) is so prolific in the censorship space that it has its own weekly podcast discussing topics of interest for those who take down speech online for a living. The Stanford Internet Observatory (which FFO has reported on here, here and here) is perhaps the top university center at the heart of Congressional investigations and multiple lawsuits (see here, here and here) into online censorship. The center received a joint $3 million government grant from the National Science Foundation in 2021, lasting through 2026, for such troubling technocratic activities as top-down control of online rumors and rapid-response techniques to throttle emerging social media narratives.

In its Musk-focused “emergency” episode in October 2022, the Stanford censorship center’s founder and head, Alex Stamos, openly mused about the “hellish existence” he predicted that Elon Musk, as X’s new owner, would now have, owing to pressure from censorship professionals and their government allies around the world:

My top line takeaway is Elon Musk has bought himself into a hellish existence. He has made an incredibly bad decision by making himself personally responsible for moderation of Twitter in a way that no human being has been responsible publicly, at least for content moderation on any major platform. And he has made a serious kind of business CEO level mistake that is putting most of his net worth, which is invested in Tesla and all the other Tesla shareholders, in jeopardy.

Throughout his remarks on the podcast, Stamos expressed hopes that pressure from Tesla investors and pressure from international governments could be used to punish Musk for re-establishing free speech on Twitter/X.

Stamos also reiterated his view that Tesla shareholders and threats to the Tesla share price could secure Musk’s obedience to censorship demands. Indeed, Stamos and his co-host invoked “Tesla” 14 times in talking about how governments might be able to use Musk’s commercial vulnerability with Tesla against him to install Twitter speech controls. At one point, Stamos singled out Tesla’s Gigafactory presence in Germany:

The really hard thing for Musk, and this is where I’m saying I think this is a huge strategic mistake for him, and the people who should be really angry today are Tesla shareholders because most of Musk’s net worth is tied up in Tesla shares. Tesla is a company that actually makes stuff that has to follow the law to ship products around the world and has huge exposure to all of these different regions that want to control the internet. That is, as you and I talk about all the time, the number one fight over the next 10 years is going to be who gets to control what speech is allowed online, and every government outside of the US with our First Amendment wants to do that. And even there’s people in the US who want to, there’s restrictions on that, but around the world, those restrictions generally don’t exist.

So Musk has done something that is unprecedented here, which he has said, “I am personally responsible for content moderation on this platform. And by the way, most of my money is in a car company that makes and ships cars in all of these jurisdictions.” Tesla has a factory in Berlin. Berlin, the headquarters of European content moderation with Germany and NetzDG.

Just one month ago, Elon Musk visited that Gigafactory in Berlin, calling the plant “The Jewel of Germany”:

At Giga Berlin today to congratulate the team on their excellent progress.

The factory looks amazing!

We are going to cover all the concrete with art.

— Elon Musk (@elonmusk) November 3, 2023

Giga Berlin – Das Juwel Deutschlands 🇩🇪

— Elon Musk (@elonmusk) November 3, 2023

Stamos’s curious singling out of Musk’s vulnerability in Germany dovetails with an observation FFO Executive Director Mike Benz has repeatedly made about the “Transatlantic Flank Attack,” wherein US State Department partners have worked assiduously for six years with their European counterparts to create blanketing censorship standards on both sides of the Atlantic, starting in Germany in 2017.

They are trying “Transatlantic Flank Attack” 2.0

This is a redux of the August 2017 Germany NetzDG law that in many respects started the era of modern Internet censorship

It was US-UK diplomats & foreign policy blob stakeholders who pushed it then, and who again push it now https://t.co/FFxqYtnTjo

— Mike Benz (@MikeBenzCyber) June 23, 2023

For more on Germany and NetzDG’s role in ushering in the modern age of Western world censorship, see this primer video.

During the October 2022 Moderated Content podcast, Stamos’ co-host, Evelyn Douek, seemingly rejoiced that the European Union had responded to Musk’s free speech commitment with threats of regulatory enforcement to compel censorship of speech. Douek said a video of Musk shaking hands with pro-censorship EU commissioner Thierry Breton looked like a “hostage video.”

So overseas lawmakers are also having fun with this. The EU’s Thierry Breton tweeted a wavy hand, “Hi, Elon Musk” in Europe. This is [inaudible 00:17:36] tweeting Elon Musk’s “The bird is free” tweet. “In Europe, the bird will fly by our rules. #DSA.” Of course, he’s referring to the massive regulatory package, the Digital Services Act, which has just been passed in the EU that has all of these obligations for very large online platforms, [inaudible 00:17:53] like Twitter that Musk is going to have to comply with.

There was this really funny interaction. When Musk first announced that he was buying Twitter, he made all of his tweets about being a free speech platform. And then a couple of days later, they release this video of him standing with Thierry Breton shaking hands saying, “Of course, of course, we will comply with EU law.” It kind of looked like this weird hostage video of him having to stand there saying, “Yes, we will comply with EU law.” And Thierry Breton re-upped that video yesterday. So again, Musk isn’t operating in the same legal environment that Jack Dorsey was 10, 15 years ago.

One of Stamos’ major concerns upon Musk’s takeover was the possibility that the censorship industry’s network of “disinformation researchers” might lose access to the platform’s API. Access to the API is important for the censorship industry, allowing them to analyze mass quantities of posts and accounts, identify networks of users, and target them for censorship.

I’m extremely worried. And I’m worried about what happens at Twitter, but I’m especially worried about the long-term impact that has on the entire industry. Like you said, Twitter was a very early leader here of having an API that allowed for researcher access.

That being said, Twitter went out there and, to give them credit, opened up an API, worked with researchers. Our group has a researcher agreement with Twitter in which we’re allowed to have free access to an API that they otherwise sell. In return, we have to follow some privacy rules and stuff. But those rules are completely neutral and they have no restrictions on what we say about Twitter. I have a real fear that both the contracts go away and then eventually the APIs go away.

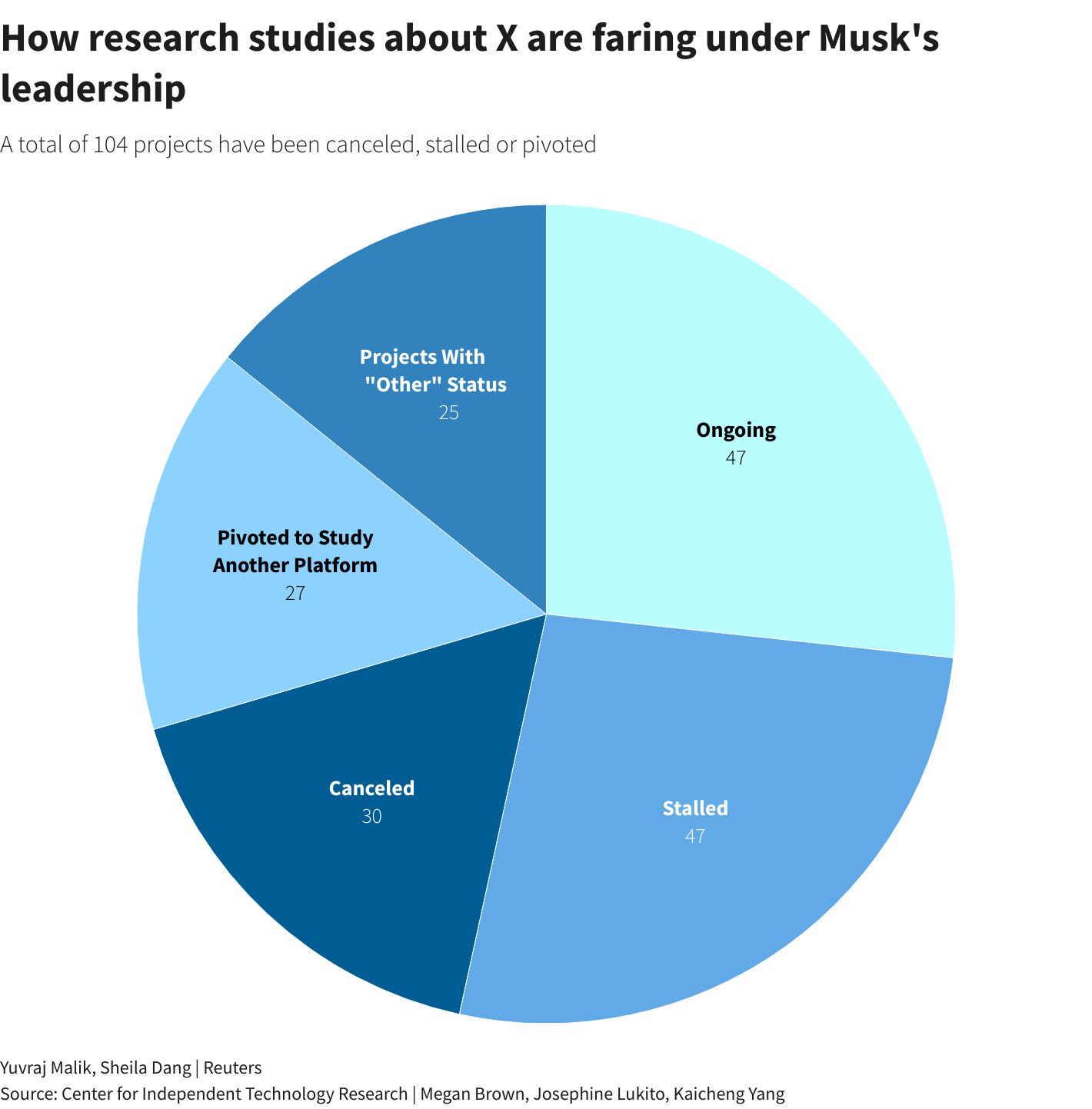

Stamos’ fears in this regard were borne out: under Musk, X did end free academic access to the API, resulting in a major setback for the censorship industry. A survey recently released by Reuters found that over 100 studies, including research on classic censorship pretexts like “disinformation” and “hate speech,” were stalled, cancelled, or had to switch focus to other platforms in the wake of the API restriction.

From Reuters:

The survey showed 30 canceled projects, 47 stalled projects and 27 where researchers changed platforms. It also revealed 47 ongoing projects, though some researchers noted that their ability to collect fresh data would be limited.

The affected studies include research on hate speech and topics that have garnered global regulatory scrutiny.

The reduced ability to study the platform “makes users on (X) vulnerable to more hate speech, more misinformation and more disinformation,” said Josephine Lukito, an assistant professor at the University of Texas at Austin.

The Stanford censorship professional network’s dismay over losing access to the raw data that feeds the “AI Censorship Death Star,” which is used to build complex speech management schemes on platforms, appears to be a complete validation of Elon Musk’s decision in July 2023 to limit the ability to exploit Twitter data for such purposes. For more on the censorship superweapons Stanford sought not to lose, see this primer video (embedded below, with 7 million views).

Wow. Musk has no idea the DARPA rattlesnake he just stepped on by this doing this…

My take on @elonmusk’s new rate limit policy, from the lens of the censorship industry: https://t.co/AZ5SPIgHiz pic.twitter.com/Rw4KBZsT9O

— Mike Benz (@MikeBenzCyber) July 1, 2023

Michael Benz is the Executive Director of the Foundation for Freedom Online. Previously, Mr. Benz served as Deputy Assistant Secretary for International Communications and Information Technology at the U.S. Department of State. Follow him on Twitter @FFO_Freedom.